Introduction

What does “Big Data” mean to you and your company? To many, the phrase means large quantities of information from different sources, data that changes by the second. For example, it can refer to the temperature of a chemical process where a small variation makes the difference between good product and wasted materials.

A common definition of big data, cited by guru99.com, is “a collection of data that is huge in volume yet growing exponentially with time.” The “4 Vs of Big Data” are:

Figure 1: 4 Vs of Big Data

- Volume, in terms of data coming from multiple sources at the same time

- Variety, which can be flow volumes, temperatures, production costs and other information calculated separately

- Velocity, referring to the speed of information from application logs and device sensors (IoT)

- Variability, meaning data flows while a machine is running and stops when the production cycle ends

“Big Data” can also refer to lines from sales contracts referencing products, volumes and/or quantities from several customers. From a supply chain perspective, those same sales numbers require raw materials plus labor and machine operating time to produce them.

In the past, “Big Data” often referred to information from one department such as Production or Sales. One of the biggest challenges with big data is providing information siloed in one department to other areas that need it.

Even searching big data poses challenges, because the results depend on how the query is written. When a query isn’t phrased correctly, or a required document has a naming error, important information is left out.
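Naming errors defeat exact matching. As a minimal sketch, assuming documents are located by file name, Python's standard-library `difflib.get_close_matches` can tolerate small typos. The file names and the 0.6 similarity cutoff below are hypothetical choices for illustration, not part of any particular search product.

```python
import difflib

# Hypothetical file names; the first one contains a typo ("Reprot").
documents = [
    "Batch_Reprot_2023_Q1.pdf",
    "Batch_Report_2023_Q2.pdf",
    "Sales_Contract_Acme.docx",
]


def exact_search(query: str, names: list[str]) -> list[str]:
    """Return names that contain the query as a substring (case-insensitive)."""
    q = query.lower()
    return [n for n in names if q in n.lower()]


def fuzzy_search(query: str, names: list[str], cutoff: float = 0.6) -> list[str]:
    """Return names with at least one token similar to the query."""
    q = query.lower()
    hits = []
    for name in names:
        # Split "Batch_Reprot_2023_Q1.pdf" into ["batch", "reprot", "2023", "q1", "pdf"].
        tokens = name.lower().replace(".", "_").split("_")
        if difflib.get_close_matches(q, tokens, n=1, cutoff=cutoff):
            hits.append(name)
    return hits


print(exact_search("report", documents))  # misses the misspelled file
print(fuzzy_search("report", documents))  # finds both batch reports
```

The exact search returns only the correctly named file, while the fuzzy search also returns `Batch_Reprot_2023_Q1.pdf`. A lower cutoff catches more typos at the cost of more false matches.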

A key challenge is overwhelming volume.

- The New York Stock Exchange generates one terabyte of data each day

- Facebook cranks out more than 500 terabytes of customer-uploaded photos and videos every day

- A jet engine generates more than 10 terabytes of data in 30 minutes of flight

By the Numbers

Many businesses are drowning in data, not all of which is useful.

- 8%: the share of businesses using more than 25% of the internet of things (IoT) data available to them

- 10% – 25%: the share of marketing databases containing critical errors

- 20% – 30%: the share of operational expenses directly tied to poor data

- 40%: the growth rate of corporate data; a study by SiriusDecisions found that organizational data typically doubles every 12 – 18 months

- 40%: the share of businesses that miss business objectives because of poor data quality

- $13.3 million: the average annual cost of poor data quality
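A growth rate and a doubling period are two ways of expressing the same trend. Assuming both describe compound annual growth, this short sketch converts between them, using the 40% growth figure and the 12 to 18 month doubling period from the list above.

```python
import math


def doubling_time_years(annual_growth: float) -> float:
    """Years for data volume to double at a constant compound annual growth rate."""
    return math.log(2) / math.log(1 + annual_growth)


def annual_growth_from_doubling(months: float) -> float:
    """Compound annual growth rate implied by doubling every `months` months."""
    return 2 ** (12 / months) - 1


print(f"{doubling_time_years(0.40):.2f}")        # 2.06 years to double at 40% per year
print(f"{annual_growth_from_doubling(12):.0%}")  # 100% per year
print(f"{annual_growth_from_doubling(18):.0%}")  # 59% per year
```

At 40% per year, data doubles in a little over two years; doubling every 12 to 18 months corresponds to roughly 59% to 100% annual growth.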

Big Data Costs

Big Data comes with costs, especially for in-house networks. Once data is obtained, it is stored before being analyzed. It is usually backed up in case something damages or destroys it or, in the case of hacking, hijacks it.

The actual cost of this data varies based on business size and need. Estimates place the low end at $100 – $200 per month to rent a small business server. Installation costs typically start at $3,000 and go up from there.

Big Data includes up-front as well as hidden costs. The up-front costs most people consider include:

- Software tools to manage and analyze data

- Servers and storage drives to hold the data

- Staff time to ensure the physical devices work properly and to manage the data

These costs scale with the business’s storage and retrieval requirements and the processing power needed to gather the data.

Hidden costs usually refer to the bandwidth needed to move data from one source or site to another. While we might consider it a simple task to download a movie on a cellphone, moving terabytes of data between servers can be significantly more expensive.
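A rough estimate of transfer time shows why bandwidth becomes a hidden cost. This sketch assumes decimal terabytes, a link that delivers 80% of its nominal speed, and a hypothetical per-gigabyte transfer price; real throughput and prices depend on the provider and network.

```python
def transfer_hours(terabytes: float, link_mbps: float, efficiency: float = 0.8) -> float:
    """Hours to move `terabytes` of data over a link rated at `link_mbps` megabits/s.

    `efficiency` is an assumed fraction of the nominal link speed actually achieved.
    """
    bits = terabytes * 1e12 * 8                  # decimal terabytes -> bits
    effective_bps = link_mbps * 1e6 * efficiency  # usable bits per second
    return bits / effective_bps / 3600


def transfer_cost(terabytes: float, price_per_gb: float) -> float:
    """Cost of moving data at a hypothetical per-gigabyte transfer price."""
    return terabytes * 1000 * price_per_gb


# 10 TB over a 1 Gbps link, with a hypothetical $0.05/GB transfer price.
print(f"{transfer_hours(10, 1000):.1f} hours")  # 27.8 hours
print(f"${transfer_cost(10, 0.05):,.2f}")       # $500.00
```

Even on a fast dedicated link, moving 10 TB ties up the connection for more than a day, and any per-gigabyte charge grows linearly with volume.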

Accurately estimating big data costs is basically impossible without a detailed look at each company’s specific requirements. However, estimates found online range from several hundred dollars per month for a small business to tens of thousands of dollars per month or more. Infrastructure costs alone can easily top $1,000 – $2,000 per terabyte (TB), and qualified outsourced consultants charge an average of $81 – $100 per hour.
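The per-terabyte and hourly ranges quoted above can be combined into a quick low/high estimate. This is a minimal sketch: it treats the per-terabyte figure as a one-time infrastructure cost, which is an assumption, and the 5 TB and 40 consultant hours in the example are hypothetical inputs.

```python
from dataclasses import dataclass

# Ranges quoted in the text; treat them as rough figures, not vendor quotes.
INFRA_PER_TB = (1_000, 2_000)    # USD per terabyte (assumed one-time)
CONSULTANT_PER_HOUR = (81, 100)  # USD per hour


@dataclass
class CostRange:
    low: float
    high: float


def estimate_cost(terabytes: float, consultant_hours: float) -> CostRange:
    """Combine infrastructure and consulting ranges into a low/high estimate."""
    low = terabytes * INFRA_PER_TB[0] + consultant_hours * CONSULTANT_PER_HOUR[0]
    high = terabytes * INFRA_PER_TB[1] + consultant_hours * CONSULTANT_PER_HOUR[1]
    return CostRange(low, high)


r = estimate_cost(terabytes=5, consultant_hours=40)
print(f"${r.low:,.0f} to ${r.high:,.0f}")  # $8,240 to $14,000
```

The spread between the low and high figures nearly doubles the estimate, which is one reason a detailed requirements review matters.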

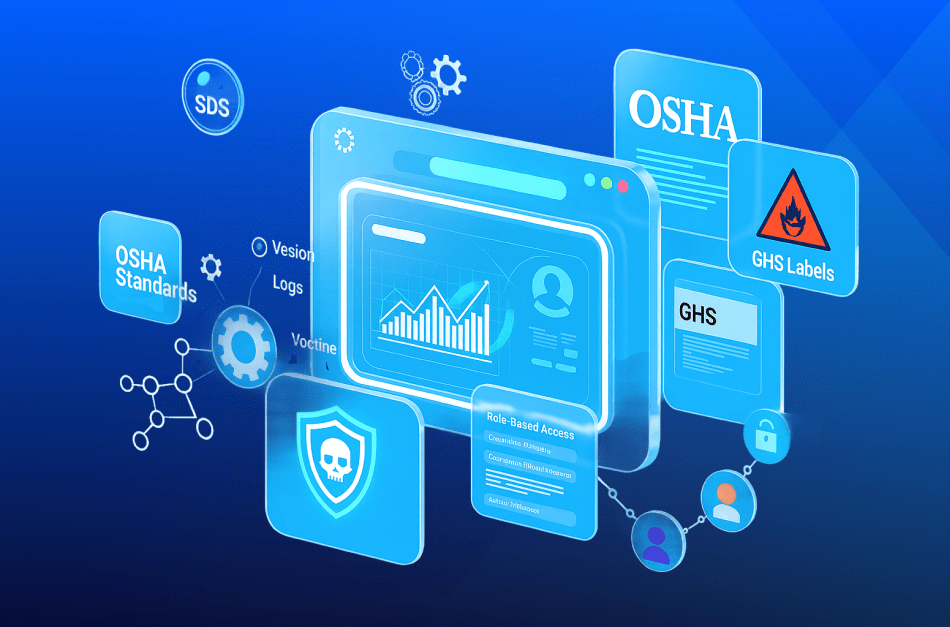

Big Data Limitations

Having access to large volumes of data is great – when a company knows what to do with it. Especially when servers are in-house, big data has its limitations. These problems include:

- Software tool compatibility, such as different types and brands of databases

- Correlation errors, such as linking incompatible or unrelatable variables

- Security and privacy, making sure data is exposed only to qualified, authorized people

From a mechanical perspective, one industrial device might use a Siemens programmable logic controller (PLC). Another device might use a Rockwell PLC, and a third could use one from Mitsubishi Electric. Each of these devices adds another layer of complexity.

Using supervisory control and data acquisition (SCADA) architecture is one way some larger companies are resolving PLC compatibility issues. SCADA includes computers, networked data communications and graphical user interfaces.
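One way to picture what a common data layer does for a mixed PLC fleet is an adapter per device type that converts each raw reading into a shared record. The sketch below is hypothetical throughout: the vendor formats, field names and Fahrenheit conversion are invented for illustration, and real SCADA systems read PLC data through vendor drivers or standard protocols such as OPC UA rather than Python dictionaries.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Reading:
    """Shared record format used downstream, regardless of the source PLC."""
    device_id: str
    tag: str
    value: float
    unit: str


def from_vendor_a(raw: dict) -> Reading:
    # Hypothetical format: already reports engineering units.
    return Reading(raw["station"], raw["tagName"], float(raw["val"]), raw["eu"])


def from_vendor_b(raw: dict) -> Reading:
    # Hypothetical format: reports temperature in Fahrenheit; convert to Celsius.
    celsius = (float(raw["temp_f"]) - 32) * 5 / 9
    return Reading(raw["id"], "temperature", celsius, "degC")


# One adapter per device type; adding a vendor means adding one function.
ADAPTERS: dict[str, Callable[[dict], Reading]] = {
    "vendor_a": from_vendor_a,
    "vendor_b": from_vendor_b,
}


def normalize(source: str, raw: dict) -> Reading:
    """Convert a raw reading from the named source into the shared format."""
    return ADAPTERS[source](raw)


print(normalize("vendor_a", {"station": "reactor-1", "tagName": "temperature",
                             "val": "98.6", "eu": "degC"}))
print(normalize("vendor_b", {"id": "mixer-2", "temp_f": 212}))
```

Keeping vendor-specific details inside small adapters lets reporting and analysis code work with a single record type.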

Figure 2: Big Data Limitations

Resolving Big Data Issues

One way pharmaceutical companies can resolve growing big data issues, especially those caused by older, legacy systems, is with a modern ERP. Enterprise resource planning (ERP) software such as Microsoft Dynamics 365 (D365) resolves many of these incompatibility issues.

Data integration is a major big data problem for companies that use one database in production and another in finance.

D365’s Data Integrator is a point-to-point integration service. It supports integrating data between Finance and Operations apps and Microsoft Dataverse. Administrators can use it to securely create data flows from sources to destinations, and data can be transformed before it is imported.
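The source-to-destination flow described above can be illustrated generically. This is not the Data Integrator API or its configuration, only a sketch of the same pattern, assuming records arrive as Python dictionaries; the `batch`, `qty` and `unit_cost` fields and the production-to-finance mapping are hypothetical.

```python
from typing import Any, Callable, Iterable, Optional

Record = dict[str, Any]


def run_data_flow(
    source: Iterable[Record],
    transform: Callable[[Record], Optional[Record]],
    destination: list[Record],
) -> int:
    """Copy records from source to destination, transforming each one first.

    A transform that returns None filters the record out. Returns the count written.
    """
    written = 0
    for record in source:
        result = transform(record)
        if result is not None:
            destination.append(result)
            written += 1
    return written


# Hypothetical production rows headed for a finance system.
production_rows = [
    {"batch": "B-101", "qty": 500, "unit_cost": 2.5},
    {"batch": "B-102", "qty": 0, "unit_cost": 2.5},  # nothing produced; skipped
]


def to_finance(row: Record) -> Optional[Record]:
    """Rename fields and compute an amount; drop empty batches."""
    if row["qty"] == 0:
        return None
    return {"reference": row["batch"], "amount": row["qty"] * row["unit_cost"]}


finance_rows: list[Record] = []
count = run_data_flow(production_rows, to_finance, finance_rows)
print(count, finance_rows)  # 1 [{'reference': 'B-101', 'amount': 1250.0}]
```

Separating the transform from the copy loop mirrors the idea of defining a flow once and reusing it with different mappings.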

Dual-write, a related D365 feature, provides bidirectional data flow for documents, master data and reference data.

Collecting data this way raises potential ethical issues when it involves large quantities of personal information, such as contact information for patients enrolled in a new drug study.

Installations by professionals experienced in working with pharmaceutical companies can organize data and help strip out personal information. Removing it reduces the chance of a Health Insurance Portability and Accountability Act (HIPAA) violation.
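In principle, stripping personal information can start with dropping identifier fields before data leaves the source system. The sketch below assumes flat records with hypothetical field names. It removes only a handful of direct identifiers, whereas HIPAA’s Safe Harbor de-identification method lists 18 identifier types, so treat it as an illustration rather than a compliance tool.

```python
from typing import Any

# Hypothetical field names treated as direct identifiers in this sketch.
# This set is far smaller than the identifier list in HIPAA's Safe Harbor method.
IDENTIFIER_FIELDS = {"name", "email", "phone", "address", "ssn"}


def strip_identifiers(record: dict[str, Any]) -> dict[str, Any]:
    """Return a copy of the record without the fields listed above."""
    return {k: v for k, v in record.items() if k not in IDENTIFIER_FIELDS}


patient = {
    "study_id": "S-0042",
    "name": "Jane Doe",
    "email": "jane@example.com",
    "dose_mg": 20,
    "outcome": "improved",
}
print(strip_identifiers(patient))  # {'study_id': 'S-0042', 'dose_mg': 20, 'outcome': 'improved'}
```

Returning a new dictionary leaves the original record untouched, so the full data can remain in the secured source system.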

Because it is cloud-based, D365 also cuts many of the personnel costs associated with big data management and maintenance. Microsoft takes on those costs, along with much of the burden of securing the underlying infrastructure.

Conclusion

Having a lot of information lets companies make accurate, informed decisions. Problems crop up when data is kept in departmental silos. Using an ERP to integrate information across departments removes many barriers to sharing information, which leads to more accurate sales and inventory predictions, reducing overall costs and boosting profits.

Boost your business ROI with Microsoft Dynamics 365 and manage big data efficiently. Book a consultation to learn more.